MIDIcut: An Approach to Editing Video with MIDI

I've mentioned it before, but I do not really get along with video editors. The software, not the profession. I can navigate my way around them enough to do very utilitarian cuts and assemblages, but I don't find the process intuitive at all. And it's not just one particular product; I've tried cutting in Final Cut, Premiere, Resolve, and iMovie. Most of the time, I end up doing my edits in Apple Motion, Apple's mostly forgotten (even by Apple) competitor to After Effects. I'd have to imagine I'm fairly alone in that workflow.

Part of my problem with video editing is how open-ended it is. It's fear of a blank page, in video form. On the one hand, I am glad that it is not so restrictive as to be creatively stifling, but on the other, working within constraints or an established structure is a creative benefit to me. It's part of why I enjoy working in modular video so much. I can only patch as many modules as I physically own. Hell, I can only patch as many modules as I have patch cables.

Over a recent pad thai lunch, I had a bit of a revelation. In order to move video projects between different stages and workflows, the file formats are standardized and open. Final Cut exports to XML, for example. And when you have open file formats, you have the ability to generate these files programmatically. It should be fairly easy to lay out an edit in a better (or, at least, more familiar) tool and transfer that into a format that these editors can deal with. I no longer have to be beholden to the open-ended frustration of video editors.

As I chewed my noodles, I started to brainstorm what a better tool would be. To me, editing is rhythm. Well, Digital Audio Workstations (DAWs) are pretty great at laying out rhythms. More than that, DAWs have a lot of tools for generating rhythms. Even more than that, these rhythms can be exported to one of the most standardized formats in the digital world: MIDI.

Pulling these ideas together, I have a gestational workflow that looks like this:

- Lay out the edit in a DAW

- Export the edit to a MIDI file

- Transform the MIDI into a timeline format that is readable by video editing software

- Make any final edits and tweaks from within the video editor

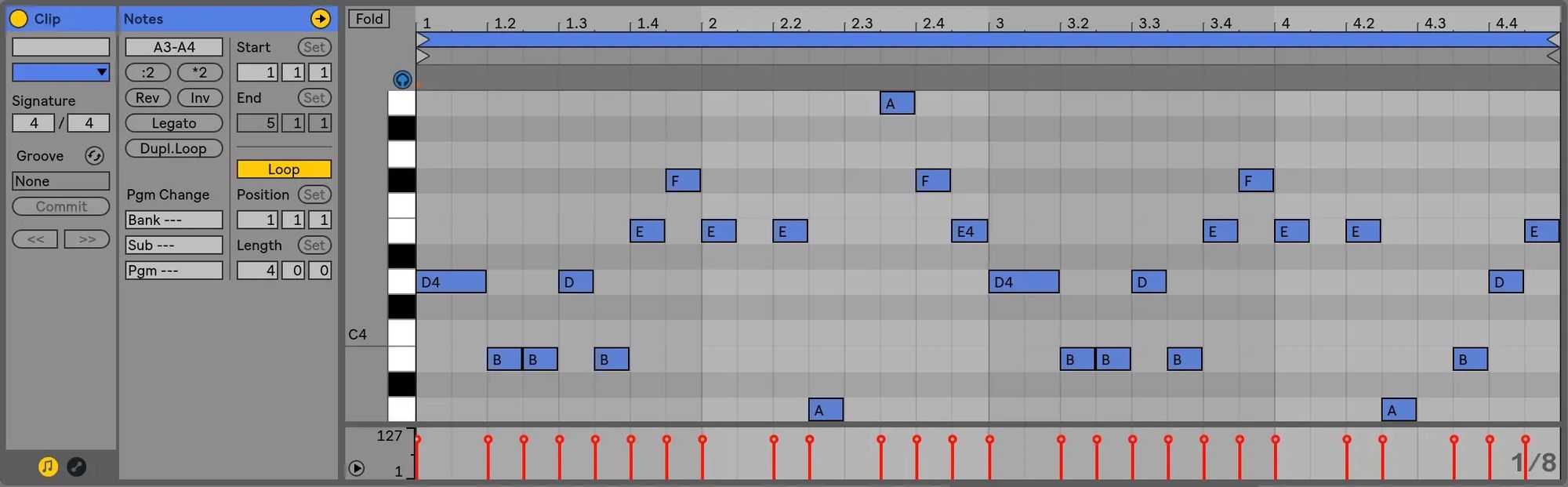

DAWs

Most DAWs represent a timeline using a piano roll format, with note values running along the vertical axis and time being the horizontal axis. This convention is much older than DAWs, having originated as a physical format for player pianos in the late 1800s.

If you imagine each note as a video clip, you can quickly see how an edit sequence maps across the piano roll.
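To make that mapping concrete, here is a minimal sketch in Python. The Note class, the notes_to_cuts helper, and the clip filenames are all made up for illustration; they are not part of any DAW or MIDI library.

```python
from dataclasses import dataclass


@dataclass
class Note:
    pitch: int     # MIDI note number (vertical axis of the piano roll)
    start: float   # start time in beats (horizontal axis)
    length: float  # duration in beats


def notes_to_cuts(notes, clip_for_pitch):
    """Turn piano-roll notes into an ordered list of (start, end, clip) cuts."""
    cuts = []
    for note in sorted(notes, key=lambda n: n.start):
        clip = clip_for_pitch[note.pitch]
        cuts.append((note.start, note.start + note.length, clip))
    return cuts


# Hypothetical one-bar pattern: C4, E4, then back to C4.
notes = [Note(60, 0.0, 1.0), Note(64, 1.0, 1.0), Note(60, 2.0, 2.0)]
clips = {60: "waves.mp4", 64: "forest.mp4"}

for start, end, clip in notes_to_cuts(notes, clips):
    print(f"{start:>4} -> {end:>4}  {clip}")
```

Each row of the piano roll becomes a clip, and each note's position and length become the cut's in and out points.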

My DAW of choice is Ableton Live. I have been using it for almost 20 years. DAW preference tends to be very personal, but here's why I like Live:

- It has a session view that allows you to work in smaller chunks than a full arrangement

- It even has a pencil tool to draw in notes where you want on the timeline

- It has integration with Cycling '74's Max, which allows for a lot of non-traditional and generative sequencers

I am really excited about that last point, in particular. Have you ever watched a video cut to Euclidean rhythms? Or what about a video cut to the physics of a bouncing ball?
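For anyone who hasn't met Euclidean rhythms: the idea is to spread a number of hits as evenly as possible across a number of steps. Here is a rough sketch of one common way to compute them. It uses a simple modulo formulation rather than Bjorklund's original algorithm, so some patterns may come out as rotations of the textbook versions. The function names and the 0.25-second step length are my own assumptions.

```python
def euclidean_rhythm(pulses, steps):
    """Spread `pulses` hits as evenly as possible across `steps` slots."""
    if not 0 <= pulses <= steps or steps <= 0:
        raise ValueError("need 0 <= pulses <= steps and steps > 0")
    return [(i * pulses) % steps < pulses for i in range(steps)]


def cut_times(pattern, step_seconds):
    """Return the times (in seconds) at which a cut should land."""
    return [i * step_seconds for i, hit in enumerate(pattern) if hit]


pattern = euclidean_rhythm(3, 8)
print("".join("x" if hit else "." for hit in pattern))  # x..x..x.
print(cut_times(pattern, 0.25))  # [0.0, 0.75, 1.5]
```

Three hits over eight steps gives the familiar tresillo pattern, and every hit becomes a cut point.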

Historic Precedent

Editing to rhythms isn't new, by any stretch. Even prior to sync sound, ambitious editors cut silent films using rhythms based on the number of frames between cuts. Once sync sound entered the picture, filmmakers took it a lot further.

For this approach, I'm specifically drawing from the work of Oskar Fischinger. In the 1930s he was creating these experimental, highly synced abstract animations (financed by MGM, no less).

In more recent history, the music videos of the 1980s and 90s increasingly used quick edits, often in time with the music to highlight the song.

Modern video editors have features for beat detection and creating sequences based on a beat, but I think that viewing this approach as purely for music-synced edits is selling it short. MIDI data is a lot more than just a tempo.

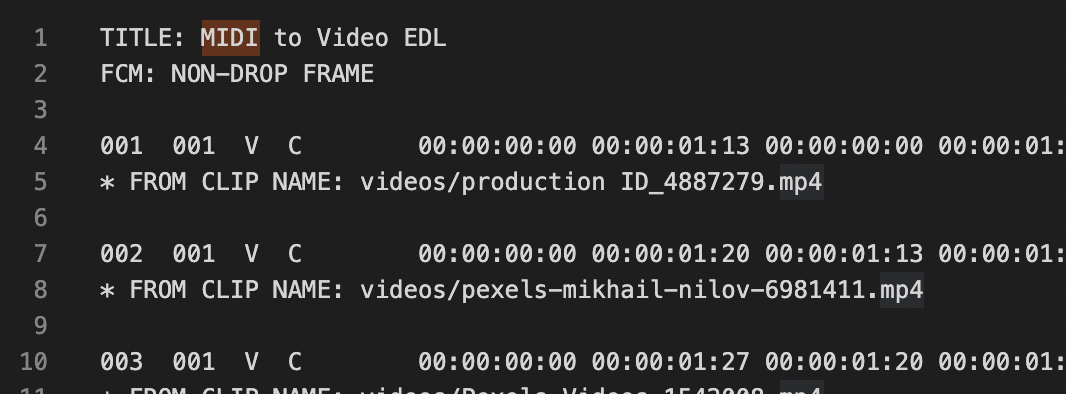

But first, we need to write a script to get that MIDI into a format we can use. There are a few formats that video editors can load for timeline information: CMX3600-formatted EDL (Edit Decision List) files, Final Cut Pro XML files, and AAF (Advanced Authoring Format).
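Of the three, the EDL is the simplest: a plain text file with one line per edit. As a rough sketch of what a single cut looks like, the snippet below builds a tiny EDL by hand. The format_edl_event helper, the AX reel name, the column spacing, and the clip name are assumptions for illustration, not something taken from a spec.

```python
def format_edl_event(number, reel, src_in, src_out, rec_in, rec_out):
    """Format one video cut as a CMX3600-style event line."""
    # Columns: event number, reel/source name, track (V = video),
    # transition (C = cut), then source in/out and record in/out timecodes.
    return (
        f"{number:03d}  {reel:<8} V     C        "
        f"{src_in} {src_out} {rec_in} {rec_out}"
    )


lines = [
    "TITLE: Example Edit",
    "FCM: NON-DROP FRAME",
    "",
    format_edl_event(1, "AX", "00:00:00:00", "00:00:02:00",
                     "00:00:00:00", "00:00:02:00"),
    "* FROM CLIP NAME: waves.mp4",
]
print("\n".join(lines))
```

The source timecodes say which part of the clip to use, and the record timecodes say where it lands on the timeline.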

I had an idea of how to approach the code for this; there are plenty of MIDI libraries I can use to read the note information, but I'm not really familiar with any of these output formats. It turns out, I didn't need to be.

Enter GPT4

Okay, okay, I definitely used this idea as a test for the latest and greatest(?) large language model. Early tests with GPT4 showed it able to write complete small applications and games with minimal prompting. Surely, it would be able to get me started on this little adventure.

I started with the following prompt:

Act as an AI programming assistant. I would like to write a script in python that translates MIDI files into Edit Description Lists (EDL) that can be loaded in the Divinci Resolve video editor. Input arguments are the MIDI file to be processed and directory of video files. At the start of the script, the video files are randomly assigned to each note in the scale. Whenever a note is triggered in the MIDI file, the assigned video clip is displayed for the length of the note. Based on that information, what other information do you need from me?

To which it responded:

To assist you in creating this script, I'll need some additional information:

- MIDI-to-EDL Mapping: What information from the MIDI file should be used to create the EDL file? For example, should the pitch, velocity, or duration of the notes in the MIDI file be mapped to specific properties in the EDL file, such as clip start times, playback speed, or clip length?

- Video Clips Specification: Do you want the video clips to be trimmed or resized according to the note duration? If so, should the video playback speed be adjusted to fit the note duration, or should the clip be cut short/extended with a freeze-frame effect?

- EDL Format: Divinci Resolve supports multiple EDL formats, such as CMX3600 and Final Cut Pro. Which specific EDL format would you like to use?

- Video Clips Naming: How should the video clips be named in the EDL file? Are there any specific naming conventions or formats that need to be followed?

- Overlapping Notes: How should the script handle overlapping notes in the MIDI file? Should the corresponding video clips be displayed sequentially, or should they be layered on top of each other with some transparency applied?

Once you provide this information, I can help you better with creating the Python script.

After I answered those questions, we were off and running. It selected the mido Python library for reading MIDI information. It even understood the ins and outs of the CMX3600 file format without me having to provide documentation.

I had a couple of false starts and some missteps because I tried to add too many features too quickly, but within a handful of responses from me, it had written a Python script that did exactly what I wanted.

There is no doubt that this is really impressive technology. I think that this particular use case is ideal. I didn't have to spend a weekend familiarizing myself with the CMX3600 file format and all of the methods of the mido library. If I ran into trouble, I was going to have a hell of a time finding someone who was knowledgeable about both of these niche technologies to help me out. Stack Exchange wasn't going to help here. All I had to do was communicate my goals to GPT4 and it translated them into an executable script.

The Code

Here's the script:

import mido
import os
import random
import argparse


def frames_to_timecode(frames, fps=30):
    """Convert a frame count to a non-drop-frame HH:MM:SS:FF timecode."""
    ff = int(frames % fps)
    ss = int((frames // fps) % 60)
    mm = int((frames // (fps * 60)) % 60)
    hh = int(frames // (fps * 60 * 60))
    return f"{hh:02d}:{mm:02d}:{ss:02d}:{ff:02d}"


def process_midi_file(midi_file, video_dir):
    # Load MIDI file
    midi = mido.MidiFile(midi_file)

    # Randomly assign video files to notes
    note_to_video = {}
    video_files = [f for f in os.listdir(video_dir) if f.lower().endswith(('.mp4', '.mov', '.avi'))]
    if not video_files:
        raise ValueError(f"No .mp4, .mov, or .avi files found in {video_dir}")
    # Cover every MIDI note number (0-127), not just the 88 piano keys (21-108)
    for note in range(128):
        note_to_video[note] = os.path.join(video_dir, random.choice(video_files))

    # Process MIDI messages and generate EDL events
    # (iterating over a MidiFile yields messages in playback order, with msg.time in seconds)
    events = []
    current_time = 0
    for msg in midi:
        current_time += msg.time
        if msg.type == 'note_on' and msg.velocity > 0:
            events.append((current_time, note_to_video[msg.note]))

    return events


def create_video_edl(events, midi, output_edl, fps=30):
    with open(output_edl, 'w') as f:
        f.write("TITLE: MIDI to Video EDL\n")
        f.write("FCM: NON-DROP FRAME\n\n")

        clip_id = 1
        for i, (start_time, video_file) in enumerate(events):
            start_frames = int(start_time * fps)

            # Each clip runs until the next note starts (or until the MIDI file ends)
            if i + 1 < len(events):
                next_start_time = events[i + 1][0]
                duration_frames = int((next_start_time - start_time) * fps)
            else:
                duration_frames = int((midi.length - start_time) * fps)

            # Source timecodes: play each clip from its first frame for the cut's duration
            src_in = frames_to_timecode(0, fps)
            src_out = frames_to_timecode(duration_frames, fps)
            # Record timecodes: where the cut lands on the timeline
            rec_in = frames_to_timecode(start_frames, fps)
            rec_out = frames_to_timecode(start_frames + duration_frames, fps)

            f.write(f"{clip_id:03} 001 V C {src_in} {src_out} {rec_in} {rec_out}\n")
            f.write(f"* FROM CLIP NAME: {video_file}\n\n")
            clip_id += 1


if __name__ == '__main__':
    parser = argparse.ArgumentParser(description='Create video edit decision list (EDL) based on MIDI notes.')
    parser.add_argument('midi_file', help='Path to the input MIDI file.')
    parser.add_argument('video_dir', help='Path to the directory containing video files.')
    parser.add_argument('output_edl', help='Path to the output EDL file.')
    args = parser.parse_args()

    midi_file = args.midi_file
    video_dir = args.video_dir
    output_edl = args.output_edl

    midi = mido.MidiFile(midi_file)
    events = process_midi_file(midi_file, video_dir)
    create_video_edl(events, midi, output_edl)

You will need mido installed first, so run pip install mido before anything else. After that, run the script like this: python midicut.py midifile.mid path/to/videos edlfilename.edl. And that's it. You'll end up with an .edl file that you can load into your editor. In DaVinci Resolve, you do this from File > Import > Timeline.

Caveats

While MIDI files can contain multiple tracks, this script treats every file as if it were MIDI Format 0, in which everything is smooshed down into one track. (mido merges all of the tracks when you iterate over the file.) If you want to do multiple tracks, just export multiple MIDI files and import them separately.

It helps if all of the frame rates involved (video clips used, video editor project, and EDL file) are the same. The script writes its timecodes at 30 fps unless you change the fps default in the code. If you are trying to sync an edit to audio, you may get some sound sync issues with mixed frame rates.
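To see why mixed frame rates drift, here is a small sketch that converts the same moment to timecode at two frame rates, then reads a 30 fps timecode back as if it were 24 fps. Both helper functions are hypothetical and only handle integer, non-drop-frame rates.

```python
def seconds_to_timecode(seconds, fps):
    """Convert seconds to non-drop-frame HH:MM:SS:FF at an integer fps."""
    total_frames = int(seconds * fps)  # truncate to the last whole frame
    ff = total_frames % fps
    ss = (total_frames // fps) % 60
    mm = (total_frames // (fps * 60)) % 60
    hh = total_frames // (fps * 3600)
    return f"{hh:02d}:{mm:02d}:{ss:02d}:{ff:02d}"


def timecode_to_seconds(timecode, fps):
    """Interpret an HH:MM:SS:FF timecode at the given integer fps."""
    hh, mm, ss, ff = (int(part) for part in timecode.split(":"))
    return hh * 3600 + mm * 60 + ss + ff / fps


# The same note onset, 1.5 seconds in, at two common frame rates.
print(seconds_to_timecode(1.5, 24))  # 00:00:01:12
print(seconds_to_timecode(1.5, 30))  # 00:00:01:15

# A timecode written at 30 fps but read by a 24 fps project lands late.
written = seconds_to_timecode(1.5, 30)
print(timecode_to_seconds(written, 24))  # 1.625
```

An eighth of a second is easy to hear against a beat, which is why it pays to pick one frame rate and stick to it.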

I'd ideally like to be able to import all of the notes as separate tracks to make further tweaks a little easier, but after the wild side quest that I had with the AAF file format (it's a doozy), I'm going to rethink my approach. DAWs have no problems working with an unlimited number of tracks, but video editors do not share that viewpoint. Trying to get Resolve to behave like Live was probably the wrong way to go.

Example & Conclusion

Here's a video I made using a MIDI file of Claude Debussy's The Little Shepherd and a bunch of stock footage from Pexels. One thing I like about the script and this approach is that the video selection is not completely random. Each note of the scale is randomly assigned a video, but what that means is that when the music returns to a note, it returns to the same video. That may not make a lot of sense written out, but it's very evident when you see it:
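The same property shows up in a small sketch. This is not the script itself, just an illustration of assigning clips once up front; the assign_clips helper, the melody, the clip names, and the seed are all made up.

```python
import random


def assign_clips(pitches, clips, seed=None):
    """Randomly pair each distinct pitch with a clip, once, up front."""
    rng = random.Random(seed)
    return {pitch: rng.choice(clips) for pitch in sorted(set(pitches))}


melody = [67, 64, 62, 64, 67, 67]  # hypothetical MIDI note numbers
clips = ["waves.mp4", "forest.mp4", "city.mp4", "clouds.mp4"]

mapping = assign_clips(melody, clips, seed=7)
sequence = [mapping[pitch] for pitch in melody]
print(sequence)
```

Whatever the random draw turns out to be, the first, fifth, and sixth cuts always show the same clip, because they are the same note.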

I have been stuck for a long time trying to figure out an editing workflow that works for me and how my brain thinks about longer-form content. But I think this is it. I know it's just stock footage above, but it's the edit and the rhythm of it that really sells it. If I can take this approach and apply it to my own video synthesis work, I think I will overcome a huge artistic barrier.